Key Features of Azure AI Foundry and How to Use Them


As artificial intelligence becomes central to digital transformation, enterprises need platforms that operationalize AI at scale — with agility, security, and performance. Microsoft’s Azure AI Foundry, launched in 2024, is an end-to-end, enterprise-grade platform designed to democratize and accelerate AI innovation.

Positioned as an advancement over legacy AI/ML platforms, Azure AI Foundry is a modular, integrated stack for developing, tailoring, managing, and deploying AI models and agents within Microsoft’s trusted Azure ecosystem.

IDC projects global AI spending to exceed $300B by 2026, growing at a CAGR of 27%. Yet, over 80% of AI projects still fail to scale due to infrastructure complexity, governance gaps, and poor data readiness. Azure AI Foundry directly addresses these challenges.

Industry Context: Why Azure AI Foundry Really Matters

The enterprise AI industry is experiencing explosive growth:

- As per IDC, worldwide expenditure on AI-based systems will top $300 billion by 2026, expanding at a CAGR of 27%.

- A survey conducted by Gartner in 2024 showed that 63% of businesses are testing GenAI, and 19% are already in production.

- Yet more than 80% of AI projects continue to fail to scale up from pilots because of infrastructure complexity, governance loopholes, and a lack of data readiness.

Azure AI Foundry confronts these issues head-on by providing an efficient, enterprise-grade, modular architecture for production-scale AI deployment, which positions it as an attractive option for companies across industries.

Major Components & Capabilities

1. Model Catalog & Customization Framework

- Access enterprise-grade foundation models from OpenAI, Meta (LLaMA), Microsoft Research, Hugging Face, and more:

- GPT-4 Turbo (OpenAI)

- LLaMA 3 (Meta)

- Phi-3 (Microsoft)

- Customize with fine-tuning, LoRA, PEFT, or retrieval-augmented generation (RAG).

- Support for multimodal models (text, vision, speech).

- Built-in governance → version control, explainability, and compliance integration with Azure ML & Fabric.

Example: A manufacturer grounds a GPT model in its equipment maintenance logs using RAG, creating a chatbot that answers predictive maintenance questions.
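The retrieval step in an example like this can be sketched in a few lines. This is a minimal illustration rather than Foundry's API: the log entries, the `retrieve` and `build_prompt` helpers, and the bag-of-words similarity are all assumptions standing in for a real embedding model and vector index.

```python
import math
import re
from collections import Counter

# Hypothetical maintenance log entries standing in for an indexed corpus.
LOGS = [
    "Pump P-101 bearing replaced after vibration alarm",
    "Conveyor belt C-7 realigned following tracking drift",
    "Pump P-101 seal leak detected during inspection",
]

def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine(a, b):
    """Cosine similarity between two token-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(question, docs, k=2):
    """Return the k documents most similar to the question."""
    q = Counter(tokenize(question))
    ranked = sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]

def build_prompt(question, docs, k=2):
    """Assemble a grounded prompt: retrieved context first, then the question."""
    context = "\n".join(f"- {d}" for d in retrieve(question, docs, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

prompt = build_prompt("What happened to pump P-101?", LOGS)
print(prompt)
```

The prompt built here would then be sent to whichever model you deploy. The model itself is not retrained: the logs reach it only as context at query time.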

2. Agentic AI Development Environment

- Agent Framework SDKs → Build goal-driven agents.

- Azure Open Agents → Orchestrate multi-step tasks.

- Plugin & Tool Layer → Connect APIs and enterprise tools directly into agents.

Example: A financial services firm builds an autonomous agent to generate ESG compliance reports across data silos.

Gartner predicts 70% of new enterprise apps will use autonomous AI agents by 2028.

3. Unified Data Fabric Integration

- Native integration with Microsoft Fabric for:

- Real-time pipelines (OneLake, Synapse)

- Semantic modeling & governance

- Delta Lake-based transactional storage

- Cuts data wrangling time, which commonly consumes 60–70% of the effort in AI projects.

Use case: A retailer ingests POS data into Fabric, uses Foundry to build recommendation engines, and pushes insights into Power BI dashboards in real time.

4. AI Safety, Security & Governance Hub

- Responsible AI tools: toxicity filters, hallucination checks, prompt injection protection.

- Governance features: audit trails, model cards, compliance dashboards.

- Zero-trust security: Integrated with Microsoft Entra ID (formerly Azure Active Directory).

Microsoft aligns Foundry with the EU AI Act, the NIST AI RMF, and ISO/IEC 23894, making it viable for regulated industries like finance, healthcare, and government.

5. Multi-Cloud & On-Premises Flexibility

- Hybrid deployment with Azure Stack

- Edge inferencing via Azure Arc + IoT modules

- Multi-cloud APIs & containerized deployment

Example: An oil & gas firm trains models in the cloud, deploys them offshore with Azure Arc, and syncs periodically to HQ.

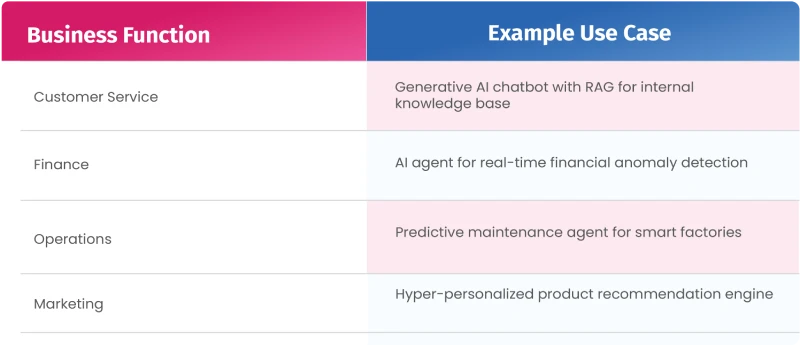

A Snapshot: What It Can Deliver to Organizations

Industry Use Cases

Finance → Fraud detection, compliance reporting, wealth management advisors

- Foundry reduces false positives in fraud detection by up to 35%.

Healthcare → Summarization agents, EHR search, patient chatbots

- Early adopters cite 3x faster documentation and 60% less admin workload.

Manufacturing → Predictive maintenance, quality control, supply chain optimization

- Foundry enables 15–25% downtime reduction via real-time anomaly detection.

Retail → Personalized marketing, dynamic pricing, demand forecasting

- Retailers report a 20% lift in click-through rates using tuned models.

A Step-by-Step Guide: Getting Started with Azure AI Foundry

Azure AI Foundry gives businesses a complete lifecycle platform, from model selection to deployment. Onboarding succeeds, however, only with effective planning, stakeholder alignment, and integration of technical and strategic components. The step-by-step roadmap below shows how to begin:

1) Determine Organizational AI Maturity and Readiness

Before any technical deployment, assess the current state of your organization’s AI and data infrastructure.

Key Considerations:

- Do you have clean, structured, accessible data?

- What is your AI use-case portfolio: POCs, MVPs, production systems?

- Are your data scientists, ML engineers, and business units aligned on AI strategy?

- Are there existing MLOps or DevOps pipelines in place?

Tools & Resources:

- Use Microsoft’s AI Maturity Assessment Toolkit.

- Conduct a gap analysis using Azure’s Well-Architected Framework for AI.

Outcome:

This assessment identifies gaps in your data governance, tooling, skills, and culture, and sets realistic expectations and success metrics.

2) Specify Strategic AI Use Cases

Not all problems are ideal for foundation models or agent-based automation. Begin with clear, high-impact cases.

Use Case Types:

How to Prioritize:

- Apply the ICE framework (Impact, Confidence, Effort)

- Assess ROI potential, compliance sensitivity, and availability of data
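As a rough sketch of ICE-style ranking, the snippet below scores a few candidate use cases. The formula (impact times confidence, divided by effort), the 1 to 10 scales, and the example use cases are all assumptions for illustration; teams weight these factors differently.

```python
from dataclasses import dataclass

@dataclass
class UseCase:
    name: str
    impact: int      # 1-10, expected business value
    confidence: int  # 1-10, certainty that the estimate holds
    effort: int      # 1-10, cost to deliver (higher is harder)

    @property
    def ice_score(self) -> float:
        # Assumed formula: reward impact and confidence, penalize effort.
        return self.impact * self.confidence / self.effort

# Hypothetical candidates with made-up scores.
candidates = [
    UseCase("Invoice summarization agent", impact=6, confidence=8, effort=3),
    UseCase("Fraud detection model", impact=9, confidence=5, effort=9),
    UseCase("Internal FAQ chatbot", impact=4, confidence=9, effort=2),
]

ranked = sorted(candidates, key=lambda u: u.ice_score, reverse=True)
for u in ranked:
    print(f"{u.name}: {u.ice_score:.1f}")
```

Note that the highest-impact candidate ranks last here because of its effort and low confidence, which is the kind of trade-off the framework is meant to surface.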

Outcome:

Well-scoped problem descriptions and use case blueprints to develop with Azure AI Foundry.

3) Configure the Azure AI Foundry Environment

In this step, you provision the necessary services and set up the Foundry infrastructure.

Prerequisites:

- Azure subscription (Enterprise or Professional)

- Microsoft Fabric access (if native data integration is being used)

- Azure CLI and SDKs

Setup Steps:

- Provision Azure AI Foundry Service through the Azure Portal.

- Associate your subscription with Microsoft Fabric, Azure Machine Learning, and Azure OpenAI services.

- Turn on Model Catalog and set up model access (OpenAI, Hugging Face, Microsoft Phi models).

- Set up role-based access control (RBAC) for various stakeholders:

a) Data Scientists

b) Business Analysts

c) AI Engineers

d) Compliance Officers
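Before assigning roles in Azure, it can help to write down who should be able to do what. The sketch below models that mapping with a deny-by-default check. The role keys and permission names are hypothetical and do not correspond to Azure's built-in role definitions.

```python
# Hypothetical permission names; real Azure role definitions differ.
ROLE_PERMISSIONS = {
    "data_scientist": {"read_data", "train_model", "evaluate_model"},
    "business_analyst": {"read_reports"},
    "ai_engineer": {"read_data", "train_model", "deploy_model"},
    "compliance_officer": {"read_reports", "read_audit_logs"},
}

def is_allowed(role: str, action: str) -> bool:
    """Deny by default: unknown roles and unlisted actions are rejected."""
    return action in ROLE_PERMISSIONS.get(role, set())

print(is_allowed("ai_engineer", "deploy_model"))
print(is_allowed("business_analyst", "deploy_model"))
```

A table like this can then be translated into actual role assignments, with each stakeholder group receiving only the permissions it needs.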

Result:

A completely functional AI Foundry environment connected to your own Azure resources.

4) Ingest and Prepare Enterprise Data

Data is fuel for AI. Data integration and preparation are usually the most time-consuming, and the most important, steps.

a) Integration Tools:

- Microsoft Fabric (for OneLake, Dataflows, Pipelines)

- Azure Synapse (for data warehousing and analytics)

- Azure Data Factory (for ETL)

- Power BI Data Marts (for visualization)

b) Data Preparation Tasks:

- Data cataloging and classification

- Duplicate and null removal

- Semantic modeling

- PII tagging and sensitivity labeling
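Three of these tasks (null removal, duplicate removal, and PII tagging) can be illustrated on a handful of in-memory records. This is a simplified sketch: the `prepare` helper, the sample rows, and the email-only PII pattern are assumptions, and a production pipeline would run inside your data platform with far broader PII detection.

```python
import re

# Simplified pattern: flags values that look like email addresses only.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")

def prepare(records):
    """Drop rows with missing values, remove exact duplicates, and tag PII."""
    seen = set()
    cleaned = []
    for row in records:
        if any(v is None or v == "" for v in row.values()):
            continue  # null removal
        key = tuple(sorted(row.items()))
        if key in seen:
            continue  # duplicate removal
        seen.add(key)
        # Record which fields contain something that looks like an email.
        pii_fields = sorted(
            k for k, v in row.items() if isinstance(v, str) and EMAIL_RE.search(v)
        )
        cleaned.append({**row, "pii_fields": pii_fields})
    return cleaned

# Hypothetical sample rows.
raw = [
    {"id": 1, "contact": "ana@example.com", "note": "renewal due"},
    {"id": 1, "contact": "ana@example.com", "note": "renewal due"},
    {"id": 2, "contact": None, "note": "missing contact"},
    {"id": 3, "contact": "front desk", "note": "walk-in"},
]
clean = prepare(raw)
print(clean)
```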

Best Practices:

- Utilize Delta Lake architecture for versioned, scale-out data lakes.

- Embrace data mesh principles for domain ownership and decentralization.

Result:

Clean, governed, and discoverable data assets that are model-ready for training or inference.

5) Select and Tailor Foundation Models

Azure AI Foundry offers a curated Model Catalog with access to:

- Microsoft Phi-3

- Meta LLaMA 3

- OpenAI GPT-4 Turbo

- Mistral, Cohere, and Hugging Face models

Customization Paths:

- Prompt Engineering: Suitable for quick prototyping and chat interfaces.

- Fine-Tuning: Utilize Azure ML or Hugging Face Trainer to fine-tune models on your own proprietary data.

- LoRA / PEFT: Suitable for adapting a model when training data or compute is limited, because only a small set of added parameters is trained.

- RAG (Retrieval-Augmented Generation): Integrate vector search with LLMs for real-time question answering.
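To see why LoRA is lighter than full fine-tuning, it helps to count parameters. LoRA keeps the original weight matrix frozen and trains two small low-rank factors instead. The calculation below is a back-of-the-envelope sketch; the matrix size and rank are hypothetical values chosen for illustration.

```python
def lora_trainable_params(d: int, k: int, r: int) -> int:
    """Parameters in the two low-rank factors B (d x r) and A (r x k)."""
    return r * (d + k)

d = k = 4096  # hypothetical square projection matrix
r = 8         # hypothetical LoRA rank

full = d * k                          # parameters updated by full fine-tuning
lora = lora_trainable_params(d, k, r)  # parameters updated by LoRA

print(f"full: {full:,}  lora: {lora:,}  ratio: {lora / full:.4%}")
```

For this single matrix, the low-rank factors hold 1/256 of the parameters that full fine-tuning would update.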

Tools:

- Azure Machine Learning Studio

- Azure AI Studio (low-code model customization)

- LangChain, Semantic Kernel, and Microsoft’s Prompt flow

Outcome:

A fine-tuned, customized, or augmented model that is applicable to your unique business logic and vocabulary.

6) Develop Autonomous Agents using Azure AI Agent Framework

The Azure AI Foundry Agent SDK enables developers to create goal-based, multi-step AI agents.

Components:

- Planner Module: Converts user input to structured plans

- Memory Store: Maintains short- and long-term context

- Tool Integration: APIs, databases, RPA tools, Power Automate

- Execution Engine: Executes the plan step by step
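How these four components fit together can be shown with a toy loop. The code below is a conceptual sketch and not the Agent SDK: `TOOLS`, `plan`, and `run_agent` are hypothetical names, the planner returns a fixed plan, and the tools are stubs that only format strings.

```python
from typing import Callable

# Hypothetical tools; a real agent would call APIs, databases, or workflows.
TOOLS: dict[str, Callable[[str], str]] = {
    "collect": lambda topic: f"raw feedback about {topic}",
    "summarize": lambda text: f"summary of ({text})",
    "deliver": lambda text: f"sent: {text}",
}

def plan(goal: str) -> list[str]:
    """Planner: turn a goal into an ordered list of tool names (fixed here)."""
    return ["collect", "summarize", "deliver"]

def run_agent(goal: str) -> list[str]:
    """Execution engine: run each step, feeding the prior output forward."""
    memory: list[str] = []  # short-term memory of step outputs
    current = goal
    for step in plan(goal):
        current = TOOLS[step](current)
        memory.append(current)
    return memory

trace = run_agent("employee sentiment")
for line in trace:
    print(line)
```

In a real agent the planner would typically be a model call that decides the steps, and the memory store would persist beyond a single run.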

Example:

An HR agent can automatically collect employee sentiment information, summarize insights, and deliver reports to department heads weekly.

Tips:

- Use pre-built workflows with Azure Open Agents.

- Specify guardrails and constraints for agents (blocked actions, rate limits).
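The two constraints named in the last tip, an action blocklist and a rate limit, might look like this in their simplest form. The `Guardrail` class and the action names are hypothetical; this is one possible shape for such a check, not a feature of the platform.

```python
import time

class Guardrail:
    """Reject blocked actions and cap calls within a sliding time window."""

    def __init__(self, blocked, max_calls, window_seconds, clock=time.monotonic):
        self.blocked = set(blocked)
        self.max_calls = max_calls
        self.window = window_seconds
        self.clock = clock  # injectable so the limiter can be tested
        self.calls = []     # timestamps of recently allowed calls

    def allow(self, action: str) -> bool:
        if action in self.blocked:
            return False
        now = self.clock()
        # Keep only timestamps still inside the window.
        self.calls = [t for t in self.calls if now - t < self.window]
        if len(self.calls) >= self.max_calls:
            return False
        self.calls.append(now)
        return True

guard = Guardrail(blocked={"delete_records"}, max_calls=2, window_seconds=60)
print(guard.allow("delete_records"))  # blocked action
print(guard.allow("send_report"))     # within the rate limit
```

An agent's execution engine would call `allow` before each tool invocation and skip or escalate any step that is refused.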

Outcome:

Deployable autonomous agents that automate business processes and enhance human productivity.

7) Enforce Governance, Safety, and Monitoring

Responsible AI is not a choice; it is a requirement, particularly given evolving regulations such as the EU AI Act.

Foundry Governance Features:

- Responsible AI Dashboard with metrics for safety, fairness, explainability, and privacy.

- Policy enforcement with Microsoft Purview (formerly Azure Purview) and Defender for Cloud.

- Prompt injection and jailbreak detection controls.

- Model cards and audit logs for explainability.
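To make the idea of an audit log concrete, here is a small sketch of a tamper-evident log in which each entry's hash covers the previous entry. It illustrates the general technique only; the `append_entry` and `verify` helpers and the event fields are assumptions, and nothing here reflects how Azure stores its logs.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash for the first entry

def _digest(event, prev_hash):
    payload = json.dumps({"event": event, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode()).hexdigest()

def append_entry(log, event):
    """Append an event whose hash covers the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"event": event, "prev": prev_hash, "hash": _digest(event, prev_hash)})

def verify(log):
    """Recompute every hash; an edited or reordered entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash or entry["hash"] != _digest(entry["event"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True

# Hypothetical events.
audit_log = []
append_entry(audit_log, {"actor": "ai_engineer", "action": "deploy", "model": "v2"})
append_entry(audit_log, {"actor": "compliance_officer", "action": "review", "model": "v2"})
print(verify(audit_log))
```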

Tips:

- Appoint a Responsible AI Officer in your governance team.

- Run regular bias and performance audits.

Outcome:

A secure, compliant, and auditable AI system that satisfies both enterprise and regulatory requirements.

8) Deploy, Monitor, and Continuously Improve

AI is not a deploy-once, use-forever tool; ongoing monitoring and learning are essential.

Deployment Pipelines:

- Utilize Azure DevOps or GitHub Actions for CI/CD.

- Implement model versioning and rollback triggers.
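A rollback trigger can be as simple as comparing an observed error rate against a threshold. The sketch below keeps a version history in memory and reverts when the threshold is exceeded. `ModelRegistry`, the version labels, and the 5% threshold are hypothetical; in practice this logic would live in your CI/CD pipeline or deployment tooling.

```python
class ModelRegistry:
    """Track deployed versions and roll back when the error rate is too high."""

    def __init__(self, max_error_rate: float):
        self.max_error_rate = max_error_rate
        self.history: list[str] = []  # deployed versions, oldest first

    @property
    def active(self):
        return self.history[-1] if self.history else None

    def deploy(self, version: str) -> None:
        self.history.append(version)

    def report(self, errors: int, requests: int) -> bool:
        """Return True if the observed error rate triggered a rollback."""
        too_many_errors = requests > 0 and errors / requests > self.max_error_rate
        if too_many_errors and len(self.history) > 1:
            self.history.pop()  # revert to the previous version
            return True
        return False

registry = ModelRegistry(max_error_rate=0.05)
registry.deploy("v1")
registry.deploy("v2")
registry.report(errors=10, requests=100)  # 10% error rate exceeds 5%
print(registry.active)
```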

Monitoring Tools:

- Azure Monitor and Application Insights for latency and uptime.

- Model Drift Detection and alerting using Azure ML Monitoring.
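One widely used drift statistic is the Population Stability Index (PSI), which compares how a feature is distributed in a baseline sample and in recent data. The implementation below is a simplified sketch with equal-width bins and made-up values; it is not how Azure ML computes drift. A PSI above roughly 0.25 is a commonly cited rule of thumb for a significant shift.

```python
import math

def psi(expected, actual, bins=4, eps=1e-6):
    """Population Stability Index between a baseline and a recent sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def shares(values):
        counts = [0] * bins
        for v in values:
            # Clamp out-of-range values into the first or last bin.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # Floor each share at eps so the logarithm is always defined.
        return [max(c / len(values), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

# Hypothetical feature values.
baseline = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8]
shifted = [0.7, 0.75, 0.8, 0.8, 0.85, 0.9, 0.9, 0.95]

print(psi(baseline, baseline))
print(psi(baseline, shifted))
```

An alert would fire when the index for a monitored feature crosses the threshold your team has chosen.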

Feedback Loops:

- Support collection of user feedback (thumbs up/down, comments).

- Periodically retrain or fine-tune models on fresh data.
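A feedback loop needs some rule for turning votes into action. The sketch below flags a model for retraining once enough votes have arrived and the approval rate falls below a target. The `should_retrain` helper and both thresholds are illustrative assumptions that you would tune for your own application.

```python
def should_retrain(feedback, min_votes=20, min_approval=0.8):
    """Flag retraining when enough votes exist and approval falls below target."""
    votes = [f for f in feedback if f in ("up", "down")]
    if len(votes) < min_votes:
        return False  # not enough signal yet
    approval = votes.count("up") / len(votes)
    return approval < min_approval

# Hypothetical week of feedback: 15 thumbs up, 10 thumbs down.
week = ["up"] * 15 + ["down"] * 10
print(should_retrain(week))
```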

Outcome:

Robust, performant, and continuously improving AI applications integrated into your enterprise workflows.

In a Nutshell:

Azure AI Foundry is more than a platform — it’s a catalyst for enterprise AI adoption. By combining models, agents, data fabric, and governance into a single ecosystem, it helps organizations:

- Scale AI securely and responsibly

- Shorten deployment timelines

- Unlock efficiencies across industries

- Balance innovation with compliance

Whether you’re piloting AI or scaling enterprise-wide adoption, Azure AI Foundry offers the tools, guardrails, and integrations to future-proof your AI journey.